Introduction: Shifting Intelligence Closer to the Source

Traditional cloud architectures often route all sensor and machine data to centralized servers for processing. While this model offers scalability, it can introduce latency and bandwidth constraints—especially in environments where split‑second decisions are critical. Edge computing moves data processing and analytics closer to devices, allowing engineering teams to act on real‑time information without relying exclusively on distant data centers. By placing compute resources at the “edge” of the network—on the factory floor, atop oil rigs, or within remote infrastructure—engineers gain immediate visibility and control, reducing reaction times and easing network loads.

Reducing Latency for Time‑Sensitive Applications

In many engineering contexts, such as robotics, autonomous vehicles, or process control, a delay of even a few hundred milliseconds can lead to product defects, safety risks, or inefficient operations. Edge nodes process sensor inputs and run analytics locally, returning actionable results within milliseconds. For example, a robotic welding arm can adjust its trajectory in real time if onboard analytics detect misalignment, rather than waiting for a round trip to a central server. This local decision-making shortens cycle times and supports quality control, because machines can respond to anomalies as soon as they are detected.

Optimizing Bandwidth and Network Traffic

Streaming raw data from thousands of sensors to the cloud can overwhelm network infrastructure and drive up connectivity costs. Edge computing allows preliminary filtering and aggregation at the source, so that only relevant events or summarized metrics traverse the network. An oil pipeline monitoring system might continuously record pressure and flow, but transmit only readings that exceed configured thresholds, plus hourly averages. By offloading routine analytics to local gateways, organizations conserve bandwidth for high-priority alerts and in-depth historical analysis, making long-distance links more reliable and cost-effective.
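The pipeline example above can be sketched in a few lines of Python. This is a minimal illustration, not a production design: the `Reading` type, the `summarize` function, the kPa unit, and the threshold values are all assumptions made for the example.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List, Sequence, Tuple


@dataclass
class Reading:
    timestamp: float      # seconds since an arbitrary epoch (assumed)
    pressure_kpa: float   # hypothetical unit for this sketch


def summarize(
    readings: Sequence[Reading],
    low: float,
    high: float,
    window_s: float = 3600.0,
) -> Tuple[List[Reading], List[Tuple[float, float]]]:
    """Return (out-of-range readings, per-window averages).

    Only these two small results would be sent upstream; the raw
    readings stay on the edge node.
    """
    # Readings outside [low, high] are forwarded individually as alerts.
    alerts = [r for r in readings if not (low <= r.pressure_kpa <= high)]

    # Everything is also bucketed into fixed windows and averaged.
    buckets: Dict[int, List[float]] = {}
    for r in readings:
        buckets.setdefault(int(r.timestamp // window_s), []).append(r.pressure_kpa)
    averages = [
        (index * window_s, mean(values)) for index, values in sorted(buckets.items())
    ]
    return alerts, averages
```

With four readings spread over two hours and one of them out of range, the gateway would transmit one alert and two averages instead of four raw samples. The saving grows with the sampling rate.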

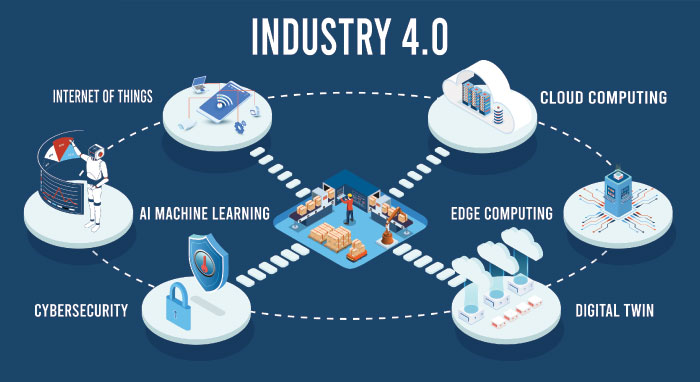

Supporting Hybrid Architectures

Edge computing complements, rather than replaces, cloud and on-premises systems. A hybrid model uses edge nodes for immediate processing while forwarding selected data to the cloud for deeper analytics, machine-learning model training, and archival. Engineering teams can execute local control logic on edge devices, then periodically sync aggregated data sets to centralized platforms. This architecture preserves the scalability and collaboration benefits of the cloud, such as cross-site dashboards and large-scale simulations, while maintaining fast local responses and resilience when network connectivity is intermittent.
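One common way to tolerate intermittent connectivity is a store-and-forward buffer: the edge node queues records locally and drains the queue whenever the uplink is available. The sketch below assumes a hypothetical `send` callable that returns `True` on success; the class name, batch size, and queue limit are illustrative choices, not part of any particular product.

```python
from collections import deque
from typing import Callable, Deque, List


class StoreAndForward:
    """Buffer records locally and forward them in batches when possible."""

    def __init__(self, send: Callable[[List[dict]], bool], max_items: int = 10_000):
        self._send = send
        # A bounded deque silently drops the oldest record once full,
        # which caps memory use on a constrained device.
        self._queue: Deque[dict] = deque(maxlen=max_items)

    def record(self, item: dict) -> None:
        self._queue.append(item)

    def pending(self) -> int:
        return len(self._queue)

    def flush(self, batch_size: int = 100) -> int:
        """Try to send queued records; return how many were delivered."""
        sent = 0
        while self._queue:
            count = min(batch_size, len(self._queue))
            batch = [self._queue[i] for i in range(count)]
            if not self._send(batch):
                break  # uplink unavailable: keep the data and retry later
            for _ in range(count):
                self._queue.popleft()
            sent += count
        return sent
```

Records are removed only after `send` reports success, so a failed transfer leaves the queue untouched. Dropping the oldest data when the buffer fills is one possible policy; a deployment that cannot lose records would need persistent storage instead.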

Data Management and Analytics at the Edge

Deploying analytics at the edge requires selecting the right software stack and hardware platforms. Lightweight analytics engines, often containerized, run on industrial PCs, gateways, or specialized edge appliances. Engineers design data pipelines that ingest raw sensor inputs, apply preprocessing (such as noise reduction or anomaly detection), and run inference on pre-trained models. Updating those models remotely keeps edge nodes aligned with evolving patterns (for example, new vibration signatures that indicate wear). Effective data management at the edge therefore balances computational load, storage constraints, and the frequency of synchronization with central repositories.
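As a rough illustration of the preprocessing stage, the following sketch flags anomalous sensor values with a rolling z-score, using only the Python standard library. The class name, window size, and threshold are assumptions; a real deployment might use a trained model in place of this simple statistic.

```python
from collections import deque
from statistics import mean, pstdev
from typing import Deque


class RollingAnomalyDetector:
    """Flag values that lie far from the recent rolling mean."""

    def __init__(self, window: int = 20, z_threshold: float = 3.0):
        self._history: Deque[float] = deque(maxlen=window)
        self._z_threshold = z_threshold

    def update(self, value: float) -> bool:
        """Return True if `value` looks anomalous against recent history."""
        is_anomaly = False
        # Only score once the window is full, so early samples
        # are never flagged against too little data.
        if len(self._history) == self._history.maxlen:
            mu = mean(self._history)
            sigma = pstdev(self._history)
            if sigma > 0 and abs(value - mu) / sigma > self._z_threshold:
                is_anomaly = True
        # Anomalous values are kept out of the baseline so a single
        # spike does not distort later scores.
        if not is_anomaly:
            self._history.append(value)
        return is_anomaly
```

Because the detector keeps only a fixed-size window, its memory footprint is constant, which suits gateways with tight storage limits.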

Ensuring Security and Compliance

Introducing compute nodes outside traditional data centers expands the attack surface. Engineering leaders must harden edge devices with secure boot, encrypted storage, and strict access controls. Network segmentation separates edge traffic from corporate IT systems, limiting lateral movement in case of a breach. Regular firmware and software updates, ideally automated through a trusted orchestration framework, keep edge nodes protected against emerging vulnerabilities. Additionally, aligning deployments with industry standards (such as IEC 62443 for industrial cybersecurity) supports both operational integrity and regulatory compliance.
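One small piece of the update process, checking that an image has not been altered before installing it, can be sketched as follows. This is a deliberately simplified stand-in: it uses a shared-key HMAC from the Python standard library, whereas production systems typically rely on asymmetric signatures and hardware-backed keys. The function names and the key handling are assumptions for illustration only.

```python
import hashlib
import hmac


def sign_image(image: bytes, key: bytes) -> str:
    """Compute an HMAC-SHA256 tag for an update image (hex encoded)."""
    return hmac.new(key, image, hashlib.sha256).hexdigest()


def verify_image(image: bytes, key: bytes, expected_tag: str) -> bool:
    """Return True only if the image matches the tag it was shipped with."""
    actual_tag = sign_image(image, key)
    # compare_digest runs in constant time, which avoids leaking
    # information about the tag through timing differences.
    return hmac.compare_digest(actual_tag, expected_tag)
```

An edge node would refuse to install any image for which `verify_image` returns `False`.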

Integrating Edge into the Project Lifecycle

To realize edge computing's benefits, teams should weave its implementation into existing engineering workflows. Early in the design phase, project managers identify use cases where latency reduction or bandwidth optimization yields the highest returns, such as closed-loop control systems or remote monitoring sites. During prototyping, validation testbeds evaluate edge hardware under real conditions, measuring metrics such as processing latency and network throughput. Once a pilot succeeds, deployment plans should include standardized configuration templates and training modules so that rollout is consistent across diverse operational environments. Ongoing performance reviews capture lessons learned and guide incremental improvements.
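During prototyping, processing latency can be measured with a small harness such as the one below. It is a sketch under simple assumptions: `fn` stands for whatever processing step is being evaluated, and the function names and the choice of percentiles are illustrative.

```python
import time
from statistics import quantiles
from typing import Any, Callable, Dict, Iterable, List


def measure_latency_ms(fn: Callable[[Any], Any], payloads: Iterable[Any]) -> List[float]:
    """Time each call to `fn` and return the durations in milliseconds."""
    samples = []
    for payload in payloads:
        start = time.perf_counter()
        fn(payload)
        samples.append((time.perf_counter() - start) * 1000.0)
    return samples


def summarize_latency(samples_ms: List[float]) -> Dict[str, float]:
    """Report median and tail latency; needs at least two samples."""
    # quantiles(n=100) returns 99 cut points; index k-1 is the k-th percentile.
    cuts = quantiles(samples_ms, n=100, method="inclusive")
    return {
        "p50": cuts[49],
        "p95": cuts[94],
        "p99": cuts[98],
        "max": max(samples_ms),
    }
```

Tail percentiles matter more than the mean for closed-loop control, because a single slow response can miss a control deadline even when the average looks healthy.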

Case Example: Smart Manufacturing Line

A mid-sized automotive supplier retrofitted its assembly line with edge computing to improve defect detection. Vision cameras mounted above the conveyor lanes feed images to edge servers running machine-vision algorithms. When a weld joint deviates from specification, the system pauses the line and alerts technicians immediately, a change that reduced scrap rates by 35 percent. Aggregated metadata, such as defect type, timestamp, and machine ID, is forwarded hourly to a cloud analytics engine, which refines detection models and informs maintenance schedules. This two-tier approach combines rapid local reaction with centralized continuous improvement.
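The hourly metadata upload in this example might look something like the sketch below. The `DefectEvent` fields mirror the metadata named above (defect type, timestamp, machine ID), but the class, the function name, and the JSON layout are hypothetical and not taken from the supplier's system.

```python
import json
from collections import Counter
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class DefectEvent:
    timestamp: float   # seconds since an arbitrary epoch (assumed)
    machine_id: str
    defect_type: str


def hourly_summary(events: Iterable[DefectEvent], hour_start: float) -> str:
    """Build a compact JSON payload for one hour of defect events."""
    in_window = [
        e for e in events if hour_start <= e.timestamp < hour_start + 3600
    ]
    # Count defects per (machine, type) so the cloud receives
    # a few rows rather than every individual detection.
    counts = Counter((e.machine_id, e.defect_type) for e in in_window)
    rows = [
        {"machine_id": machine, "defect_type": defect, "count": count}
        for (machine, defect), count in sorted(counts.items())
    ]
    return json.dumps({"hour_start": hour_start, "defects": rows})
```

The images themselves never leave the edge server in this sketch; only the counts do, which keeps the upload small.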

Looking Ahead: Edge and AI Convergence

As edge hardware becomes more powerful and energy‑efficient, advanced AI workloads—from deep‑learning inference to sensor fusion—will increasingly run on‑site. Emerging frameworks for federated learning will allow edge nodes to collaboratively refine models without sharing raw data, preserving privacy and reducing network strain. Furthermore, 5G and private wireless networks promise to bolster edge deployments with robust, low‑latency connectivity. For engineering professionals, staying abreast of these trends—and the tools that support them—will be key to maintaining competitive, resilient operations.
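The core idea behind federated learning can be shown with a weighted average of model parameters, in the style commonly called federated averaging. The sketch below is an assumption-laden simplification: models are plain lists of floats, each node reports how many local samples it trained on, and the function name is invented for the example.

```python
from typing import List, Sequence, Tuple


def federated_average(updates: Sequence[Tuple[List[float], int]]) -> List[float]:
    """Combine per-node weights, weighting each node by its sample count.

    Each update is (weights, num_samples). Only these weights leave
    the edge node; the raw training data stays local.
    """
    total = sum(num_samples for _, num_samples in updates)
    if total == 0:
        raise ValueError("no training samples reported")
    dim = len(updates[0][0])
    averaged = [0.0] * dim
    for weights, num_samples in updates:
        if len(weights) != dim:
            raise ValueError("all nodes must share the same model shape")
        for i, w in enumerate(weights):
            averaged[i] += w * num_samples / total
    return averaged
```

For instance, `federated_average([([1.0, 2.0], 100), ([3.0, 4.0], 300)])` gives three times as much influence to the second node, since it trained on three times as many samples.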

A Strategic Layer for Engineering Excellence

Edge computing introduces a strategic layer between devices and the cloud, offering engineering teams a pragmatic route to real‑time analytics, bandwidth efficiency, and hybrid IT integration. By thoughtfully selecting use cases, securing deployments, and embedding edge workflows into project lifecycles, organizations can achieve substantial gains in responsiveness and resource utilization. As edge and AI technologies continue to mature, the most forward‑thinking engineering teams will leverage this distributed paradigm to deliver smarter, safer, and more efficient solutions.